Microsoft CEO Satya Nadella tackles deepfake concerns, emphasizing AI ethics and online safety—insight into Microsoft’s digital responsibility initiatives.

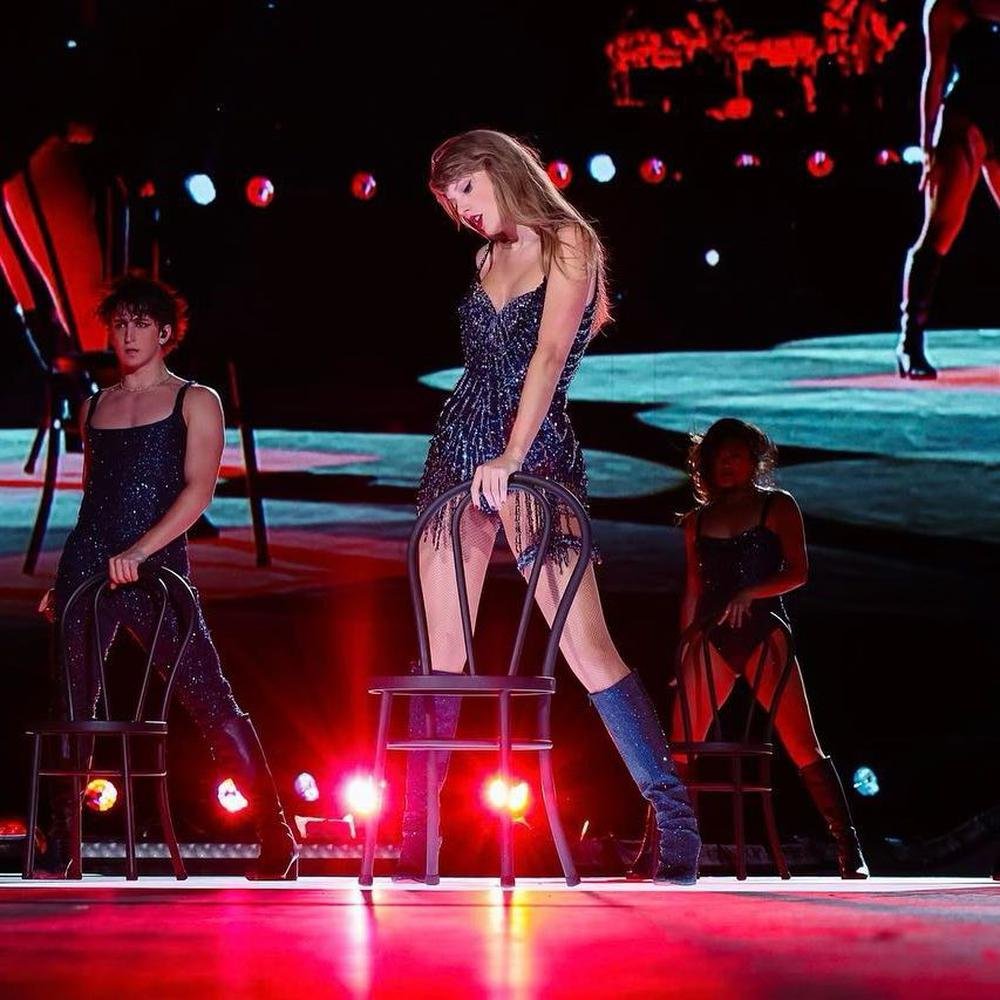

Taylor Swift

Prompt Response to Digital Misuse

In an urgent response to a growing digital threat, Microsoft’s CEO Satya Nadella has stressed the need for quick action against nonconsensual deepfake images.

This comes after AI-created fake nude pictures of Taylor Swift went viral, causing public uproar.

Satya Nadella’s Concern Over Online Safety

During an exclusive interview with NBC News’s Lester Holt, Nadella spoke about the distressing incident involving Swift.

The deepfake images, which attracted over 27 million views on X, were swiftly removed following a flood of reports from Swift’s fans.

Nadella emphasized, “Yes, we have to act. We all win when our online world is safe.” He pointed out the responsibility everyone shares in making the internet a secure space for both those who create content and those who consume it.

Neither X nor Swift’s representative has commented publicly on the issue.

Microsoft’s Role in AI and Online Safety

Microsoft is no newcomer to artificial intelligence. The tech giant is a major investor in OpenAI, the creator of ChatGPT, and has developed its own AI tools, such as Copilot, an AI chatbot in Bing.

Nadella highlighted Microsoft’s commitment to placing safety measures around the technology so that the content it produces is safer.

The CEO also discussed the importance of global agreement on certain norms, especially where the law, law enforcement, and technology platforms are involved. He believes this collaboration can achieve more in governing the digital space than is often recognized.

Tracing the Deepfake Origins

An investigation by 404 Media suggested that the deepfake images of Swift originated from a Telegram group chat, where users claimed they created them using Microsoft’s AI tool, Designer.

While NBC News could not confirm these claims independently, Microsoft took the allegations seriously.

In response to the report, Microsoft confirmed it was looking into the issue and would act accordingly.

The company clarified that its Code of Conduct forbids using its tools to create adult or nonconsensual content.

Microsoft also pointed out its ongoing efforts in developing safety features and content filters that align with its responsible AI principles, aimed at reducing misuse and creating a safer user environment.

Conclusion

This incident highlights the ethical challenges of AI technology and underscores the need for guidelines on its responsible use.

Microsoft’s quick response and commitment to digital safety demonstrate the crucial role of tech companies, law enforcement, and the global community in maintaining a respectful and safe online space.